Q: What is your new position, and what does it entail?

A: I recently joined Audix as vice president of sales and marketing. The marketing responsibility is global, but my sales focus is on the United States.

Q: How has your background prepared you for this?

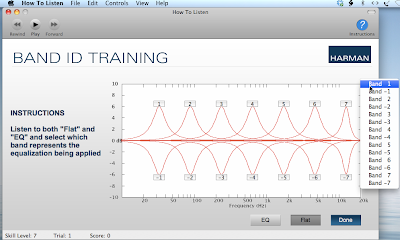

A: My first job for an audio manufacturer was at Shure, where I joined as the product line manager for wireless products in 1993. Since leaving Shure in 2003 as vice president of global marketing, I’ve worked in various marketing and product management capacities across the audio chain—from BSS and dbx signal processing at Harman to Electro-Voice loudspeakers at Bosch. Most recently, I was CEO at Community Professional Loudspeakers, where I had the pleasure of helping recapture the mojo of that American brand. Following Biamp’s acquisition of Community last summer, I decided it was time for a new challenge.

Joining Audix is a welcome return to my microphone roots. The microphone is the critical first link in the audio chain, so failure is not an option. I don’t believe you can “fix it in the mix.” For nearly two decades, the tagline at Audix has been “Performance Is Everything.” At face value, this reads simply as a message about product performance, but I also see it as a promise to the user and installer that it’s their performance we are committed to capturing, faithfully and accurately.

While professional microphones have long been associated with the stage, the studio and the house of worship, it is exciting to see the front end of the audio chain now getting the same level of attention in the corporate world. No amount of DSP will make up for a poor microphone choice. This is true whether you are on a video conference call in the executive boardroom or on a Zoom call while sheltering at home. Great audio, which starts with selecting the right microphone, really does make a difference.

Q: What new marketing initiatives are we likely to see from the company?

A: While many know Audix for our iconic OM Series of handheld vocal microphones and D Series drum microphones, we are also a leading provider of installed microphones in the conferencing space with our M Series. We are competing against some of the giants of the industry, so we look for ways to speak directly and intimately to a broad range of customers across multiple product categories and applications. For this reason, online marketing—including social media—will continue to grow in importance in our marketing mix.


Q: What are your short- and long-term goals?

A: In the short term, I want to really understand everything that goes into the design and manufacture of an Audix product, and what makes it special. I’m inspired by the level of vertical integration and supply chain control in our factory in Wilsonville, OR. Watching an aluminum rod being transformed on one of our state-of-the-art CNC machines into the housing of a D Series drum microphone is something everyone should experience. Check out “Making of the Audix D6 Drum Microphone” on YouTube!

There is an extraordinary willingness at Audix to invest in U.S.-based manufacturing capability. As the leader of sales and marketing, my long-term goal is to demonstrate the wisdom of these investments by generating significant and profitable sales growth in the United States and beyond.

Q: What is the greatest challenge you face?

A: We launched several new products this year at NAMM, including a line of headphones and earphones. While Audix may be best known as a microphone brand, I believe our “Performance Is Everything” message is just as relevant in the listening category. My challenge will be to prove it. I enjoy a good challenge!

Audix • www.audixusa.com